The global technology landscape in 2026 is being reshaped not just by faster processors or smarter apps, but by a new generation of neural AI systems and immersive augmented and mixed reality (AR/MR) devices that blur the line between human intent and digital interaction. From companies launching deep-tech neural ecosystems to cutting-edge AR glasses finally entering consumer markets, the pace of innovation in this sector is accelerating rapidly, expanding how we work, play, and communicate.

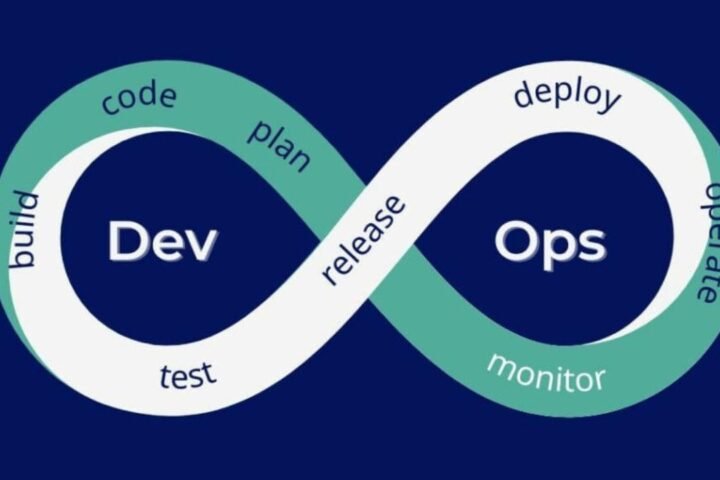

At the forefront of this trend is the recent launch of ai6 Labs by Wearable Devices Ltd., an ambitious neural AI ecosystem designed to translate human biological signals into actionable machine data without invasive sensors. The platform combines electromyography (EMG) signal decoding with Mudra gesture technology, aiming to turn user intent into digital commands for real-time interaction. By combining research, commercialization, and rapid prototyping into a “closed-loop” pipeline, ai6 Labs aims to accelerate the next generation of touchless computing interfaces, which could reshape everything from smart wearables to extended reality systems.
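To make the idea of turning muscle signals into commands more concrete, here is a minimal sketch of amplitude-based EMG decoding. It is an illustration only, not the method used by ai6 Labs or Mudra: the function names, window size, thresholds, and gesture labels are all hypothetical, and production systems typically rely on trained classifiers over multi-channel data rather than fixed thresholds.

```python
import math
from typing import List


def rms(window: List[float]) -> float:
    """Root-mean-square amplitude of one window of samples."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def decode_gestures(
    samples: List[float],
    window_size: int = 50,         # hypothetical: samples per decision window
    tap_threshold: float = 0.2,    # hypothetical amplitude cut-offs
    pinch_threshold: float = 0.6,
) -> List[str]:
    """Map a single-channel EMG stream to one coarse command per window.

    Stronger muscle activation yields a larger RMS amplitude, so each
    non-overlapping window is labeled by comparing its RMS to two thresholds.
    """
    commands = []
    for start in range(0, len(samples) - window_size + 1, window_size):
        level = rms(samples[start:start + window_size])
        if level >= pinch_threshold:
            commands.append("pinch")
        elif level >= tap_threshold:
            commands.append("tap")
        else:
            commands.append("rest")
    return commands


# Synthetic signal: a quiet window, a moderate burst, then a strong burst.
signal = [0.01] * 50 + [0.3, -0.3] * 25 + [0.8, -0.8] * 25
print(decode_gestures(signal))
```

The fixed thresholds keep the sketch readable; a real wearable would also need filtering, per-user calibration, and some way to reject motion artifacts.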

This development comes as industry projections forecast rapid revenue growth for the broader AI-enabled wearables market over the coming decade, driven by neural interfaces and gesture-based controls becoming more mainstream.

Parallel to neural AI advances, augmented reality hardware is transitioning from niche novelty to everyday reality. Established tech giants and new entrants alike are releasing AR and mixed reality devices that bring immersive experiences from concept to consumer. Apple’s Vision Pro mixed reality headset continues to refine how digital content blends with the physical world, using advanced spatial computing and gesture recognition to deliver intuitive interaction.

Alongside Apple’s headset, Meta’s Ray-Ban Display AI glasses, which feature a built-in high-resolution display that can overlay information such as directions, translations, and messages, are helping redefine smart eyewear as a practical tool rather than a futuristic gadget.

In the realm of extended reality, Samsung’s Galaxy XR headset runs the Android XR platform, designed to support a range of immersive applications from gaming and social interaction to virtual collaboration. This broad support for AR/MR content underscores how mixed reality is evolving into a versatile computing environment.

Market analysts describe AR, VR, and MR technologies as major contributors to global tech growth rather than merely emerging trends. The combined virtual and mixed reality market is projected to expand significantly as consumer and enterprise adoption rises and as smart glasses and headsets become more accessible and capable.

This technological convergence, where neural AI meets immersive reality, is reshaping how devices interpret and augment human behavior. Instead of relying solely on touchscreens or controllers, future human-computer interaction could be driven by subtle gestures, intent inferred from neuromuscular signals, and spatial contextual awareness. These capabilities open new possibilities for hands-free communication, adaptive environments, and real-time overlay of digital content onto the physical world.

The implications extend beyond entertainment and gaming. In sectors like healthcare, education, manufacturing, and remote collaboration, AR/MR systems integrated with neural AI promise to enhance training, accessibility, and workflow efficiency. Devices that once felt like sci-fi prototypes are now becoming platforms for real-world applications — from gesture-controlled interfaces to wearable systems that personalize digital experiences based on user intent.

While challenges remain, including device cost, battery life, and the difficulty of making interactions feel intuitive, 2026 is shaping up to be a pivotal year for immersive tech. As major tech brands refine hardware, new startups push neural AI innovations, and ecosystems like Android XR mature, the dream of seamless interaction between humans and machines is coming into sharper focus.

The next wave of digital interaction won’t be defined by keyboards or touchscreens alone — it will be shaped by how effectively machines can understand and respond to human intent, overlay digital insights onto reality, and create experiences that are as natural as speaking, looking, or gesturing. That transformation is happening right now.